In the same spirit as Tool Assisted Speedruns that showcase robotically precise "perfect" gameplay, it is easy to expect advanced Starcraft 2 AI to obliterate its opponents with ludicrous unit control and unrelenting macro play.

After all, the primary challenge of RTS games lies in the contrast between human multi-tasking limits and the thousands of possible optimizations available during a normal game. Pro players are keenly aware of this, which is why the mark of a talented player is high APM (Actions Per Minute) and constant decision making. Pro players know that there is "always" something they could be doing during a game.

So, it is easy to see how the raw computational power of AI can trivialize the notion of an RTS game. A computer never has to choose between macro and micro decisions -- it simply does everything at once with perfect efficiency. Its APM and multi-tasking ability might as well be infinite.

But humans still have RTS AI outmatched thanks to our strategic decision making. Computing power doesn't matter much if, after processing thousands and thousands of decisions, the AI still chooses the wrong one. By contrast, human players easily distinguish between a losing and a winning strategy and quickly adapt their decisions with extreme accuracy.

In other words, our biggest RTS edge against AI is our ability to quickly understand what wins, what loses, what is important, and what isn't. Since 2017, the team at DeepMind has been busy trying its hardest to create AI that can overcome this human advantage.

DeepMind Starcraft 2 AI is very different from the default game AI you might be used to facing. In fact, default AI in Starcraft 2 isn't really AI at all.

Default Starcraft 2 AI is the result of a human-coded system that uses rules to give players predictable and specific gameplay experiences. It doesn't gather information and produce novel reactions -- it is an advanced flowchart that benefits constantly from the guiding hand of a skilled programmer. It never learns and, as many of us know, is susceptible to abuse whenever a human player decides to get creative and do things in a non-standard way.

It never has the ability to "go off the rails" the human mind placed it on.
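To make the "advanced flowchart" idea concrete, here is a toy sketch in Python. It is not Blizzard's actual AI code: the `GameState` fields, the thresholds, and the action names are all invented for illustration. The point is only that every reaction is a rule a programmer wrote in advance.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    # Hypothetical, heavily simplified snapshot of a match.
    minerals: int
    supply_used: int
    supply_cap: int
    army_size: int
    enemy_seen_at_base: bool


def scripted_decision(state: GameState) -> str:
    """Walk a fixed priority list of hand-written rules and return an action name."""
    if state.enemy_seen_at_base:
        return "defend_base"
    # Nearly supply blocked and able to afford more supply.
    if state.supply_cap - state.supply_used <= 2 and state.minerals >= 100:
        return "build_supply"
    if state.army_size >= 20:
        return "attack"
    if state.minerals >= 50:
        return "train_unit"
    return "gather"
```

A bot like this can only ever pick one of the five branches above. If a player does something the programmer never anticipated, there is no rule for it, which is exactly why scripted AI is so easy to abuse.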

The neuroscience-inspired DeepMind AI, on the other hand, is the product of deep reinforcement learning through absurd amounts of human-created data. It is a learning algorithm that, outside of gaming, is helping solve some of the world's most complicated problems.

In this case, the data it has been processing comes from the largest set of anonymous game replays in existence -- a joint venture from DeepMind and Blizzard Entertainment that came alongside a series of AI building tools called PySC2.

With about a 50% win rate, DeepMind AI demonstrated its ability to beat the hardest difficulty Starcraft 2 AI with an "all in" style worker rush.

Since the release of all of this data, DeepMind AI learning algorithms have been able to deduce the basic rules of Starcraft 2 and, at BlizzCon 2018, we saw a glimpse of what DeepMind AI can do. It has learned how to defend itself from cannon rushes and even managed to develop standard macro strategies against human opponents.

Pretty impressive when you remember that absolutely everything DeepMind AI accomplishes in Starcraft 2 is entirely self-taught. There is no hand-coded strategy or human-designed flowchart at work -- just the AI's best efforts at analyzing human matches in order to figure out what Starcraft 2 even is and how to win.

On January 24, at 10:00 AM Pacific Time, we will see the next demonstration of DeepMind AI on StarCraft’s Twitch channel or DeepMind’s YouTube channel.

Will we see Terminator-like lethality in the form of brutal Zergling rushes or immaculate Marine control behind effortless and perfect macro play? Probably not. This type of exhibition is already possible when a machine's computing power is guided by a human mind via coding, but that isn't what DeepMind AI is about. In fact, the DeepMind AI that will play, called AlphaStar, has its APM artificially limited so it won't ever reach ludicrously inhuman levels of control.
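How AlphaStar's APM cap is actually implemented isn't described here, so the following is only a generic sketch of one way to cap an agent's actions per minute: a sliding-window rate limiter. The `ApmLimiter` class and the numbers used with it are hypothetical.

```python
from collections import deque


class ApmLimiter:
    """Allow at most `max_actions_per_minute` actions in any 60-second window."""

    def __init__(self, max_actions_per_minute: int):
        self.max_actions = max_actions_per_minute
        self.timestamps = deque()  # game-clock times (seconds) of recent actions

    def try_act(self, now_seconds: float) -> bool:
        # Forget actions that happened 60 or more seconds ago.
        while self.timestamps and now_seconds - self.timestamps[0] >= 60.0:
            self.timestamps.popleft()
        if len(self.timestamps) < self.max_actions:
            self.timestamps.append(now_seconds)
            return True  # action is allowed and recorded
        return False  # over budget: the agent has to skip or delay this action
```

An agent wrapped like this would call `try_act` with the current game time before issuing each command. Once the budget for the last minute is spent, it has to prioritize, much like a human player does.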

The value of DeepMind's demonstration lies in the self-learning algorithms on display and the ramifications its advances might have on fields of study that matter a whole lot more than Starcraft 2. Fields like science, energy, and health that each have their own share of problems DeepMind AI may be able to help solve.

So, when tuning in on January 24th, don't lose excitement if the gameplay on display isn't exactly Code S level. Instead, remember that what is on display isn't even the AI's final form -- what that might look like we can hardly guess.

Well, we can maybe guess just a little. We can, for example, look at the achievements DeepMind AI has made in a game much older than Starcraft 2: the board game Go.

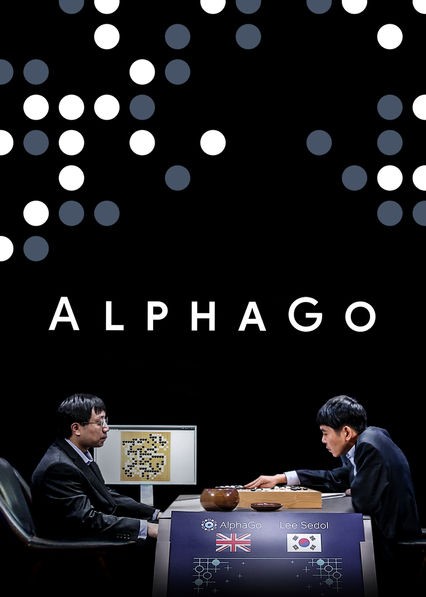

"AlphaGo" was able to pull off a historic 2016 victory against the very human champion Lee Sedol and, in doing so, achieved something AI scientists thought wouldn't be possible for at least 10 more years.

Check out the following excerpt from DeepMind.com detailing AlphaGo's monumental victory and try not to get chills:

During the games, AlphaGo played a handful of highly inventive winning moves, several of which - including move 37 in game two - were so surprising they overturned hundreds of years of received wisdom, and have since been examined extensively by players of all levels. In the course of winning, AlphaGo somehow taught the world completely new knowledge about perhaps the most studied and contemplated game in history.

If you think that is impressive, consider how the latest version of AlphaGo (AlphaGo Zero) no longer uses human data to learn how to play Go: starting from nothing but the rules of the game, it simply plays against itself over and over, learning from its own games.

And guess what? This version of AlphaGo has managed to surpass all previous versions of the AI, including the version that beat Lee Sedol! Turns out that using human matches to learn Go was just slowing the AI down.

So, what lessons might the Starcraft 2 DeepMind AI eventually teach humanity? Hopefully, for the benefit of RTS players everywhere, not a more efficient way to cannon rush or 5 pool opponents. Instead, it may teach us better strategies for tackling real-life problems that abstractly resemble a Starcraft 2 game.

These are problems that involve hidden information, real-time strategy adaptation, long-term planning, and advanced game theory. As DeepMind AI algorithmically becomes a better Starcraft 2 player, it might simultaneously become a tool humanity can use to better understand challenges beyond our normal comprehension.

-

Warcraft 3 is my one true love and I will challenge anyone to a game of Super Smash Brothers Melee.
