On August 26, the 2025 Game Science Forum opened at the International Conference Hall of the National Library of Korea under the theme “Creativity Transformed by AI, Balance Sought by the Future.” Co-hosted by the Game Science Institute and Google Korea and sponsored by the Ministry of Culture, Sports and Tourism, the Korea Creative Content Agency (KOCCA), and the Game Culture Foundation, the forum is now in its seventh edition. Since 2018, it has aimed to foster an objective, science-based discourse on games.

The first session, “The Game Industry with AI’s Technological Innovation,” featured presentations by Prof. Won-Yong Shin of Yonsei University, KRAFTON team lead Do-gyun Kim, and NC AI team lead Gi-hong Na. Ranging from global generative-AI trends to concrete use cases on the production floor, the speakers examined from multiple angles how the game industry is changing in the AI era. The forum sought a balance between rapid AI advances and human-centered creativity while pointing toward future directions for the industry.

How the Generative AI Ecosystem Is Being Built

Won-Yong Shin, a professor of Computational Science and Engineering at Yonsei University, opened with a talk titled “Services and Case Studies for Generative AI.” He noted that generative AI is advancing rapidly, driven by big tech, startups, and unicorns, and that text- and video-generation models have become part of consumers’ everyday digital landscape.

Discussing global competition, Prof. Shin highlighted Google I/O 2025, the company’s annual developer conference. Beyond the announcements of Gemini 2.5, Agent Mode, and Imagen 4, he said the most striking development was smart-glasses technology. Attendees who tried the demos, he explained, reported that the glasses were surprisingly user-friendly and performed better than expected. He also described the partnership behind the glasses, in which Samsung handles hardware while Gentle Monster leads design, and suggested that if commercialized, smart glasses could become a market opportunity for Samsung on the scale of semiconductors.

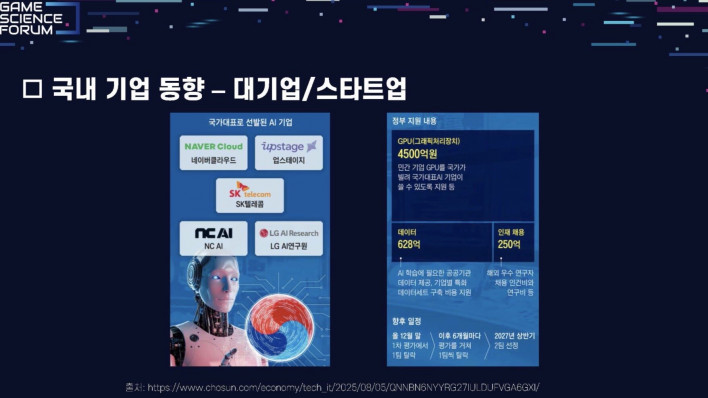

Turning to Korea’s domestic AI ecosystem, Shin pointed to the government’s “Sovereign AI” project as a defining initiative. The project, he explained, is structured like a survival competition: the five teams currently selected are evaluated every six months, with eliminations along the way, until only three remain in 2027. He described the format as both engaging to watch and significant for Korea’s ambitions toward AI self-reliance.

On business trends, Shin underlined the importance of multimodal AI—models that learn from text, images, and other data—and private AI, which appeals to companies reluctant to expose sensitive data. Manufacturers, he observed, are especially motivated to build proprietary models rather than rely on external services.

He outlined a three-layer structure of the AI ecosystem. In his view, big tech will continue to lead in semiconductors, cloud, and foundation models, while startups and large enterprises will collaborate on platforms and applications. Although big tech has dominated so far, he expects the market to shift toward demand-side companies that produce their own data.

Shin argued that multi-LLM systems are the most critical emerging trend. Whether in B2B or B2C contexts, he stressed, it makes sense to combine multiple large language models rather than rely on a single one, both to reduce costs and to use the right tool for each situation. As examples, he pointed to a startup that combines Llama with GPT Turbo, and to KT’s Mi:dm service, which uses an internal model for in-house tasks while drawing on global LLMs for customer services.
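To make the multi-LLM idea concrete, here is a minimal Python sketch of a cost- and privacy-aware router, assuming a setup loosely modeled on the KT example: sensitive requests stay on a privately deployed model, simple public requests go to the cheapest model, and harder ones go to the most capable. The model names, prices, and the `route` and `answer` helpers are hypothetical, and the stub lambdas stand in for real model clients; this is an illustration, not how any company mentioned in the talk implements routing.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ModelSpec:
    name: str
    cost_per_1k_tokens: float       # hypothetical price, illustration only
    private_deployment: bool        # True if the model runs on in-house infrastructure
    generate: Callable[[str], str]  # stand-in for a real model client


def route(*, sensitive: bool, complex_task: bool,
          models: Dict[str, ModelSpec]) -> ModelSpec:
    # Sensitive requests may only go to privately deployed models.
    candidates = [m for m in models.values() if m.private_deployment or not sensitive]
    if not candidates:
        raise ValueError("no model may handle this request")
    # Simplifying assumption: a higher price means a more capable model.
    pick = max if complex_task else min
    return pick(candidates, key=lambda m: m.cost_per_1k_tokens)


# Hypothetical model pool: one in-house model and two hosted ones.
MODELS: Dict[str, ModelSpec] = {
    "in-house": ModelSpec("in-house-model", 0.5, True, lambda p: f"in-house reply: {p}"),
    "small": ModelSpec("small-hosted-model", 0.1, False, lambda p: f"small reply: {p}"),
    "large": ModelSpec("large-hosted-model", 2.0, False, lambda p: f"large reply: {p}"),
}


def answer(prompt: str, *, sensitive: bool, complex_task: bool) -> str:
    model = route(sensitive=sensitive, complex_task=complex_task, models=MODELS)
    return model.generate(prompt)
```

With this pool, `answer("Summarize this internal build log", sensitive=True, complex_task=False)` stays on the in-house model, while a simple public FAQ question goes to the cheapest hosted model. Real systems typically route on richer signals (classifier scores, latency budgets), but the cost and data-sensitivity trade-off Shin described is the core of the idea.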

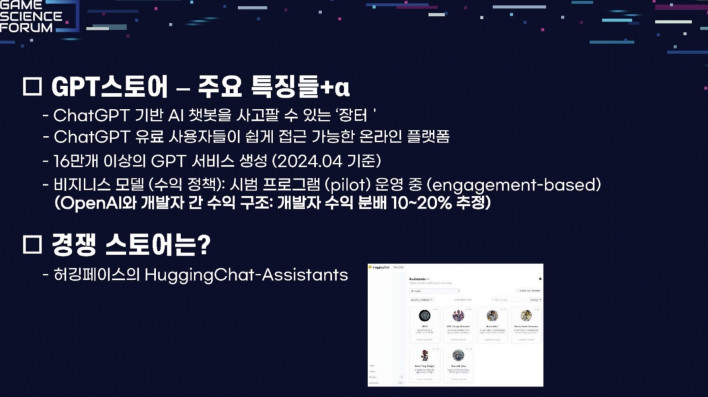

Finally, Shin highlighted the GPT Store as an expanding marketplace, noting that developers have created more than three million GPTs in total, of which over 160,000 are officially listed. However, he pointed out that revenue sharing remains opaque, with developers believed to receive only 10–20%, and said greater transparency will be crucial to energizing the market. He summarized five key trends to watch: multi-LLM orchestration, store-based business models, on-device AI solutions, private-model B2B, and conversational business intelligence (BI).

Game Production in the AI-Native Era

Do-gyun Kim, Team Lead of KRAFTON’s AI Transformation Team, followed with a presentation titled “The Present and Future of Game Development in the Age of Agents.” He opened with a saying from Dr. Kyung-il Kim, “If you don’t live as you think, you’ll think as you live,” and then joked that his team takes the opposite approach: it simply embeds AI ever more deeply into everyday work until AI becomes indispensable. He then introduced the concept of “AI-native,” observing that while terms like “AI-native” and “AI-first” are common online, they still lack consistent definitions.

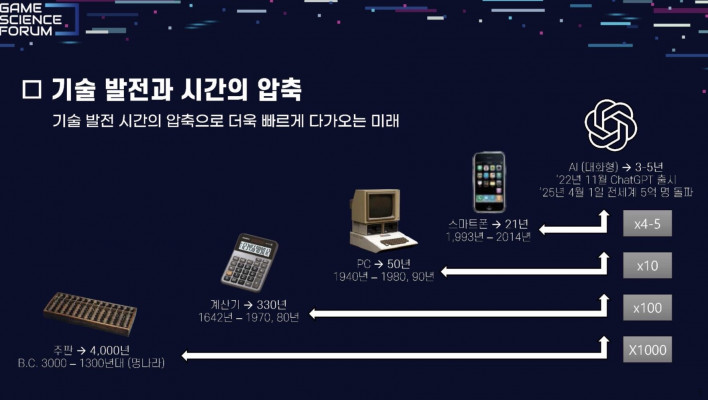

To explain why AI adoption is inevitable, Kim walked through the history of technology. The abacus took thousands of years to spread, printing took several centuries, and calculators hundreds of years. By contrast, PCs spread in 50 years and smartphones in 21, and AI has reached its current level in just 20 to 50 years. Ignoring AI today, he argued, would be like refusing to use a smartphone for a decade.

Kim then presented numbers from KRAFTON’s research: in 2024, about 52% of studios reported using AI in production, while in 2025 nearly 96% of developers said they were using AI tools. He explained that the game industry is particularly compatible with AI because of its labor-intensive workflows, fully digital nature, and high complexity.

He described a four-stage framework for AI adoption in game development. The first stage is the traditional, AI-free pipeline. The second is the current phase, where individuals use AI tools to boost efficiency and expand their roles. The third stage is when AI agents take over specific tasks within workflows, and the fourth is when agents handle entire workflows end-to-end. With many agent tools entering the market, he predicted that transitions to the third and fourth stages are near.

Kim cited KRAFTON’s own results: art tasks that once took 16 hours can now be completed in one hour, and UI can now be generated without outside support. The team also uses AI tools for code refactoring, quick coding in unfamiliar languages, narrative design, and event generation, and it has built standardized workflows across concept art, coding, balancing, and narrative design to ensure consistent quality.

He explained that AI agents work through natural-language instructions, interpreting user requests and applying the necessary tools or knowledge to complete tasks. Looking ahead, he suggested that multi-agent systems could allow specialized agents in areas like narrative, coding, art, and 3D modeling to collaborate on complex projects.
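The agent pattern described here can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not KRAFTON’s system: the two specialist agents are hypothetical stubs, and simple keyword matching stands in for the step where a real agent would use an LLM to interpret the request and choose a tool. A coordinator splits the work across specialists, and any task that no specialist claims is handed back to a human.

```python
from typing import Callable, Dict, List, Optional, Tuple

Specialist = Callable[[str], str]


# Hypothetical specialist agents; real ones would call models and tools.
def narrative_agent(task: str) -> str:
    return f"[narrative] draft for: {task}"


def coding_agent(task: str) -> str:
    return f"[coding] patch plan for: {task}"


SPECIALISTS: Dict[str, Specialist] = {
    "narrative": narrative_agent,
    "coding": coding_agent,
}

# Keyword matching is a placeholder for LLM-based intent interpretation.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "narrative": ("dialogue", "quest", "story"),
    "coding": ("refactor", "bug", "script"),
}


def pick_specialist(task: str) -> Optional[str]:
    text = task.lower()
    for domain, words in KEYWORDS.items():  # checked in insertion order
        if any(w in text for w in words):
            return domain
    return None


def coordinate(tasks: List[str]) -> List[str]:
    """Dispatch each subtask to a specialist, or escalate it to a human."""
    results = []
    for task in tasks:
        domain = pick_specialist(task)
        if domain is None:
            results.append(f"[human] needs review: {task}")
        else:
            results.append(SPECIALISTS[domain](task))
    return results
```

For example, `coordinate(["Write quest dialogue for the blacksmith", "Refactor the inventory script", "Pick the art direction"])` sends the first task to the narrative agent, the second to the coding agent, and returns the art-direction decision to a person.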

Kim proposed a division of responsibilities: humans would continue to provide vision, creativity, decision-making, consensus-building, and ethical judgment, while agents would focus on large-scale data handling, repetitive tasks, prototyping, and technical problem-solving. This shift, he argued, will usher in an era where anyone with creativity can produce games.

He concluded by citing OECD data suggesting that as AI agents take on more work, people will gain more leisure time. This, he argued, directly benefits the game industry: as leisure expands, so does consumer demand for games. In his view, AI will drive this change and help games grow as a cultural and economic force.

How Generative AI Will Change Game Production

The final speaker, Gi-hong Na of NC AI, asked whether generative AI could fundamentally change the paradigm of game development and expand human creativity.

Na shared the latest adoption statistics, noting that while in 2024 about 62% of global game companies reported using AI, by April 2025 that number had risen to 96%. He pointed out that adoption is now unavoidable, with media also reporting that about 95% of developers are using AI tools. Large studios tend to integrate AI into established pipelines, he said, while indie developers usually export results from external tools into their projects.

He emphasized that while some AI technologies are already embedded in released games, others are still in early stages and are primarily used for planning or communication support. He argued that overly complex, “end-to-end” tools that try to handle everything are often avoided in practice because they are difficult to control and offer limited immediate benefit.

According to Na, the most effective tools are those that reduce friction in areas developers find most challenging. Once those pain points are removed, creators quickly feel the benefit and often expand their ambitions, which naturally boosts creativity. He described these as tools that take care of “tedious but high-impact” work.

Na highlighted NC AI’s specific projects. On lip-sync automation, he said that high-quality modern games now require even supporting characters to have natural facial expressions and accurate mouth movements. AI now makes that feasible, and demonstrations showed clear differences from traditional methods. He also pointed to an AI-driven animation search tool that lets developers easily retrieve and reuse existing assets, for example by quickly finding a clip of a knight firing a shot.

He demonstrated speech synthesis technology by showing how a researcher’s recorded line could be converted into an Orc character’s voice, eliminating the need for additional recording sessions and speeding up iteration. This, he argued, reduces cost and effort while encouraging faster, more creative work.

Na also acknowledged skepticism about over-automation, noting that although kiosks with conversational digital humans might showcase technical sophistication, they often fail to match user expectations. For this reason, NC AI is prioritizing solutions that directly ease developers’ workflow frustrations, freeing them to focus more on creative work.

He also described a practical approach to image generation. Many users find that when they try to refine a promising output, the model returns unrelated results. NC AI addresses this with inpainting-style editing, which lets users make adjustments directly to the generated image.

Finally, Na discussed translation technology. Raw translation quality, he said, is generally high across the industry, but game localization requires special attention to mood and tone, so NC AI focuses on methods that preserve each game’s atmosphere even when the source wording is identical. He concluded that AI’s purpose should not be to reduce creators to passive roles but to enable more energetic and expansive creative activity, envisioning a future in which everyone has the opportunity to become a creator.

This article was translated from the original that appeared on INVEN.


- Seungjin "Looa" Kang

- Email: Looa@inven.co.kr
